Last year in the Windows 10 Fall Creators Update, we introduced Eye Control, an experience that allows customers to control Windows using only their eyes. We continue to design with individuals like Steve Gleason in mind, and always challenge ourselves to build products that create positive change. We understand, however, that fulfilling our mission statement – “to empower every person and organization on the planet to achieve more” – goes beyond the product alone. It starts with something more foundational: building a platform to empower all developers to create products and experiences that can help improve people’s lives and make a positive impact.

Today, we are excited to share the next step in our eye tracking journey. In addition to sharing improvements to Eye Control and broader eye tracker hardware support, we are announcing Windows eye tracking APIs and open-source code to enable app developers to build more accessible and immersive app experiences with eye tracking.

Let’s dive into the details.

New features for Eye Control

Since the launch of Eye Control, we have continued to work with Microsoft Research and with people living with ALS (amyotrophic lateral sclerosis) or MND (motor neurone disease). We've listened to customer feedback to make the product better for people with ALS, and for people everywhere who can benefit from using their eyes to control Windows. This feedback has shaped the next set of features in Eye Control, bringing us another step closer to full control of your PC using only your eyes. Here are the three areas we are updating based on this feedback and collaboration:

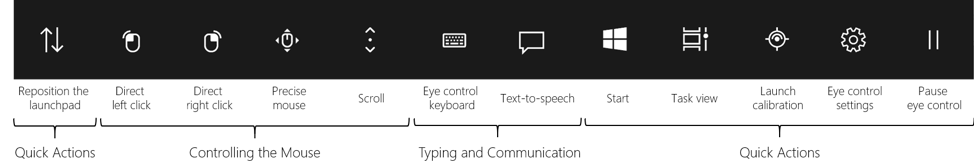

- Navigate more easily: We have added more ways to control the mouse, such as scrolling in any app or website and clicking content more quickly.

- Get there quick: Jump to common tasks. Start, Task View, device calibration, and Eye Control settings are all available directly from the Eye Control launchpad.

- Pause when you need to: Pause the launchpad when watching a movie, reading, or taking a break, to help avoid accidental interactions with your eyes.

Eye Control launchpad in the Windows 10 April 2018 Update

Eye Control is still in preview, and your feedback can help make it better. If you have feedback on how we can improve our products and services, you can use the Accessibility User Voice Forum or the Windows Feedback Hub. For help with Eye Control, contact the Disability Answer Desk.

Broader eye tracking hardware support

Last year, we announced our collaboration with Tobii to bring Windows support to their range of accessibility and gaming eye trackers. Today, we are announcing our second eye tracking partner, EyeTech, which will also bring support for its eye trackers to Windows.

Our goal is to support a breadth of eye tracking hardware on Windows, so customers can pick the device that works best for their needs and preferences. All of these eye tracking devices will work with Eye Control and the Windows eye tracking APIs so developers can build an app once and have it work with all supported hardware. We are continuing to work with the hardware ecosystem and hope to have additional partners to announce later this year. We look forward to seeing more eye tracker vendors bring broader hardware support to customers.

Introducing new Windows eye tracking APIs

On top of the Eye Control improvements and broader eye tracking hardware support, we are excited to introduce new platform tools to empower all developers to be a part of the eye tracking journey.

The Windows 10 April 2018 Update includes Windows eye tracking APIs – the same set of APIs we use to build Eye Control. These APIs provide a stream of eye tracking data that indicates where the user is looking within the bounds of the application’s window. We are also releasing an open-source Gaze Interaction Library in the Windows Community Toolkit to make it even easier to integrate gaze interactions into your UWP apps. We are starting to collaborate with partners like Tobii and EyeTech on this open-source library, and we welcome contributions from the eye tracking developer community.
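A common way to turn a stream of gaze points into an interaction is dwell: a target activates once the user's gaze has stayed on it for a set time. The sketch below illustrates that idea in Python over a stream of window-relative gaze samples. It is not the Gaze Interaction Library's API (the library targets UWP XAML apps); the sample format, `Rect`, `dwell_activations`, and the 400 ms default are hypothetical choices for illustration only.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Rect:
    """Hypothetical target bounds, in window-relative pixels."""
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


# Hypothetical sample format: (timestamp in ms, (x, y) or None if gaze is lost).
Sample = Tuple[float, Optional[Tuple[float, float]]]


def dwell_activations(samples: Iterable[Sample], target: Rect,
                      dwell_ms: float = 400.0) -> List[float]:
    """Return the timestamps at which dwelling on `target` would 'click' it.

    Gaze must stay inside the target continuously for `dwell_ms`. After one
    activation, gaze must leave the target before it can activate again.
    """
    activations: List[float] = []
    entered_at: Optional[float] = None  # when the current dwell started
    fired = False                       # whether this dwell already activated
    for t, pos in samples:
        if pos is not None and target.contains(*pos):
            if entered_at is None:
                entered_at = t
                fired = False
            if not fired and t - entered_at >= dwell_ms:
                activations.append(t)
                fired = True
        else:
            # Gaze left the target or was not tracked: reset the dwell timer.
            entered_at = None
            fired = False
    return activations
```

For example, with `button = Rect(100, 100, 80, 40)`, a stream that stays on the button from 0 ms to 450 ms activates it once at 450 ms; looking away resets the timer. Requiring the gaze to leave before re-activating is one way to avoid repeated "clicks" while the user keeps looking at the same control.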

We are already seeing partners put these Windows APIs into action in accessibility apps. One great example is Snap + Core First by Tobii Dynavox, a symbol-based communication app that helps more people communicate easily. We are also seeing eye tracking create richer, more immersive gaming experiences. And because Unity supports building UWP apps, you can use these APIs in new or existing UWP Unity games.

With the release of these APIs, we are making sure customers are in control of their privacy. The first time an app needs to access a customer's eye tracker, it requests their permission. Additionally, an app can only access eye tracking data for where the user is looking within the app's own window. Remember: if your app collects, stores, or transfers eye tracking data, you must describe this in your app's privacy statement. You can learn more about how we handle privacy on our eye tracking privacy page.
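To make these two rules concrete, here is a toy model in Python: the user is asked for permission once, the answer is remembered, and only gaze points that fall inside the app's window are delivered, converted to window-relative coordinates. This is a conceptual sketch, not how Windows implements the permission prompt or gaze delivery; `GazeAccessModel`, `ask_user`, and the screen-coordinate tuple format are hypothetical.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical window bounds on screen: (left, top, width, height).
WindowBounds = Tuple[float, float, float, float]


class GazeAccessModel:
    """Toy model: ask once, then deliver only gaze that lands in the window."""

    def __init__(self, ask_user: Callable[[], bool]):
        self._ask_user = ask_user
        self._decision: Optional[bool] = None  # None until the user is asked

    def has_permission(self) -> bool:
        if self._decision is None:
            # First access: prompt the user exactly once and remember the answer.
            self._decision = self._ask_user()
        return self._decision

    def deliver(self, screen_points: Iterable[Point],
                window: WindowBounds) -> Iterator[Point]:
        """Yield window-relative points, dropping any outside the window."""
        if not self.has_permission():
            return  # permission denied: the app receives no gaze data
        left, top, width, height = window
        for x, y in screen_points:
            rx, ry = x - left, y - top
            if 0 <= rx < width and 0 <= ry < height:
                yield (rx, ry)
```

With a window at `(100, 100, 400, 300)`, a gaze point at screen position `(150, 120)` is delivered as `(50, 20)`, while `(900, 900)` is dropped because it lies outside the window.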

Start developing!

We look forward to working with developers to enable a rich ecosystem of apps and experiences with eye tracking, and are excited to see what you all create. Here are some resources to help get started:

Documentation

- Walkthrough of the Windows eye tracking APIs

- Walkthrough of the Gaze Interaction Library

- Getting started with UWP & Unity

API Reference / Code

We will be at Build showcasing these APIs, eye tracking hardware, Eye Control, and apps and experiences. If you are attending Build, please come find our booth to talk to us and learn more! For more on this and on accessibility at Microsoft in general, please check out www.microsoft.com/accessibility.

Source: Windows Blog
