Unlock a new era of innovation with Windows Copilot Runtime and Copilot+ PCs

I am excited to be back at Build with the developer community this year.

Over the last year, we have been reimagining the Windows PC, and yesterday we introduced the world to a new category of Windows PCs: Copilot+ PCs.

Copilot+ PCs are the fastest, most intelligent Windows PCs ever, with AI infused at every layer, starting with the world’s most powerful PC Neural Processing Units (NPUs), capable of delivering 40+ TOPS of compute. The new class of PCs is up to 20 times more powerful¹ and up to 100 times as efficient² for running AI workloads compared to traditional PCs. This is a quantum leap in performance, made possible by a quantum leap in efficiency. The NPU is part of a new System on Chip (SoC) that enables the most powerful and efficient Windows PCs ever built, with outstanding performance, incredible all-day battery life, and great app experiences. Copilot+ PCs will be available in June, starting with Qualcomm’s Snapdragon X Series processors. Later this year, we will have more devices in this category from Intel and AMD.

I am also excited that Qualcomm announced this morning its Snapdragon Dev Kit for Windows, which features a special developer edition of the Snapdragon X Elite SoC. Featuring the NPU that powers Copilot+ PCs, the Snapdragon Dev Kit for Windows has an easily stackable form factor and is designed specifically to be a developer’s everyday dev box, providing the maximum power and flexibility developers need. It is powered by a 3.8 GHz 12-core Oryon CPU with dual-core boost up to 4.3 GHz, and it comes with 32 GB of LPDDR5x memory, 512 GB of M.2 storage, an 80 W system architecture, and support for up to three concurrent external displays. It is also made with 20% ocean-bound plastic.

This new class of powerful next generation AI devices is an invitation to app developers to deliver differentiated AI experiences that run on the edge, taking advantage of NPUs that offer the benefits of minimal latency, cost efficiency, data privacy, and more.

As we continue our journey into the AI era of computing, we want to give developers, who are at the forefront of this AI transformation, the right software tools alongside these powerful NPU-powered devices, so they can accelerate the creation of differentiated AI experiences for over 1 billion users. Today, I’m thrilled to share some of the great capabilities coming to Windows, making Windows the best place for your development needs.

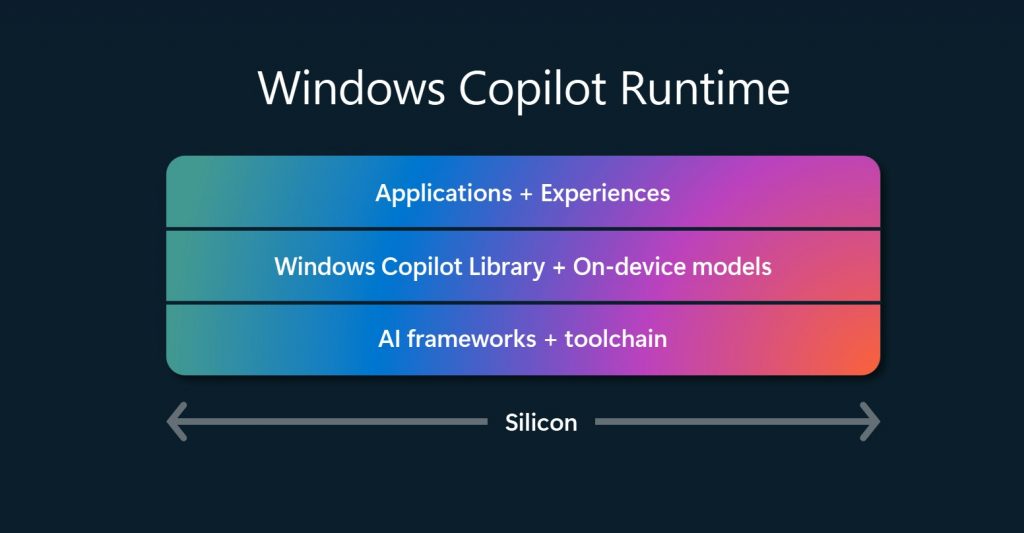

- We are excited to extend the Microsoft Copilot stack to Windows with Windows Copilot Runtime. We have infused AI into every layer of Windows, including a fundamental transformation of the OS itself to enable developers to accelerate AI development on Windows.

- Windows Copilot Runtime has everything you need to build great AI experiences regardless of where you are on your AI journey, whether you are just getting started or already have your own models. Windows Copilot Runtime includes Windows Copilot Library, a set of APIs powered by the 40+ on-device AI models that ship with Windows. It also includes AI frameworks and toolchains to help developers bring their own on-device models to Windows. This is built on the foundation of powerful client silicon, including GPUs and NPUs.

- We are introducing Windows Semantic Index, a new OS capability that redefines search on Windows and powers new experiences like Recall. Later, we will make this capability available to developers through the Vector Embeddings API, so they can build their own vector store and RAG within their applications, using their app data.

- We are introducing Phi Silica, which is built from the Phi series of models and designed specifically for the NPUs in Copilot+ PCs. Windows is the first platform to have a state-of-the-art small language model (SLM) custom built for the NPU and shipping inbox.

- The Phi Silica API, along with the OCR, Studio Effects, Live Captions, and Recall User Activity APIs, will be available in Windows Copilot Library in June. More APIs, such as Vector Embeddings, RAG, and Text Summarization, will come later.

- We are introducing native support for PyTorch on Windows with DirectML which allows for thousands of Hugging Face models to just work on Windows.

- We are introducing the Web Neural Network (WebNN) Developer Preview to Windows through DirectML. This allows web developers to take advantage of the silicon to deliver performant AI features in their web apps and to scale their AI investments across the breadth of the Windows ecosystem.

- We are introducing new productivity features in Dev Home, like Environments, along with improvements to WSL and Dev Drive and new updates to WinUI 3 and WPF, to help every developer become more productive on Windows.

I can’t wait to share more with you during our keynote today. Be sure to register for Build and tune in!

Introducing Windows Copilot Runtime to provide a powerful AI platform for developers

We want to democratize the ability to experiment, to build, and to reach people with breakthrough AI experiences. That’s why we’re committed to making Windows the most open platform for AI development. Building a powerful AI platform takes more than a new chip or model; it takes reimagining the entire system, from top to bottom. The new Windows Copilot Runtime is that system. Developers can take advantage of Windows Copilot Runtime in a variety of ways, from higher-level APIs that can be accessed via a simple settings toggle, all the way to bringing your own machine learning models. It represents the end-to-end Windows ecosystem:

- Applications and experiences created by Microsoft and developers like you across the Windows shell, Win32 apps, and web apps.

- Windows Copilot Library is the set of APIs powered by the 40+ on-device models that ship with Windows. This includes APIs and algorithms that power Windows experiences and are available for developers to tap into.

- AI frameworks like DirectML, ONNX Runtime, PyTorch, WebNN and toolchains like Olive, AI Toolkit for Visual Studio Code and more to help developers bring their own models and scale their AI apps across the breadth of the Windows hardware ecosystem.

- Windows Copilot Runtime is built on the foundation of powerful client silicon, including GPUs and NPUs.

New experiences built using the Windows Copilot Runtime

Windows Copilot Runtime powers the creation of all experiences you build, and what we – Windows – build for our end-users. Using a suite of APIs and on-device models in Windows Copilot Library, we have built incredible first-party experiences, such as:

- Recall, which helps users instantly find almost anything³ they’ve seen on their PC

- Cocreator⁴, a collaborative AI image generator that helps users bring their ideas to life using natural language and ink strokes, locally on the device

- Restyle Image, which helps users reimagine their personal photos with a new style by combining image generation and photo editing in Photos

- Others, such as Windows Studio Effects and Live Captions, which offers real-time translation of audio and video in 40+ languages into English subtitles

We are also partnering with several third-party developers on apps like DaVinci Resolve, CapCut, WhatsApp, Camo Studio, djay Pro, Cephable, LiquidText, Luminar Neo, and many more that are leveraging the NPU to deliver innovative AI experiences with reduced latency, faster task completion, enhanced privacy, and lower cloud compute costs. We’re excited for developers to take advantage of the NPU and Windows Copilot Runtime and invent new experiences.

Windows Copilot Library offers a set of APIs helping developers to accelerate local AI development

Windows Copilot Library has a set of APIs powered by the 40+ on-device AI models and state-of-the-art algorithms, like DiskANN, built into Windows. Windows Copilot Library consists of ready-to-use AI APIs like Studio Effects, Live Captions translation, OCR, Recall with User Activity, and Phi Silica, which will be available to developers in June. Vector Embeddings, Retrieval Augmented Generation (RAG), Text Summarization, and other APIs will come to Windows Copilot Library later. Developers will be able to access these APIs as part of the Windows App SDK release.

With no-code effort, developers can use Windows Copilot Library to integrate Studio Effects, such as Creative filters, Portrait light, Eye contact teleprompter, Portrait blur, and Voice focus, into their apps. WhatsApp, among others, has already upgraded its user experience by adding Windows Studio Effects controls directly into its UI.

With a similar no-code effort, developers can take advantage of Live Captions, the Windows feature that captions audio and video in real time and translates it into the user’s preferred language, in their apps.

Developers can tap into the newly announced Recall feature on Copilot+ PCs. Enhance the user’s Recall experience with your app by adding contextual information to the underlying vector database via the User Activity API. This integration helps users pick up where they left off in your app, improving app engagement and helping users move seamlessly between Windows and your app. Edge and M365 apps like Outlook, PowerPoint, and Teams have already extended their apps with Recall. Concepts, a third-party sketching app, is an early example: when launched from Recall, it takes users straight to the exact canvas location in the right document, at the same zoom level seen in the Recall timeline.

Introducing Windows Semantic Index that redefines search on Windows. Vector Embeddings API offers the capability for developers to build their own vector store with their app data

The Recall database is powered by Windows Semantic Index, a new OS capability that redefines search on Windows. Recall is grounded in several state-of-the-art AI models, including multi-modal SLMs, running concurrently and integrated into the OS itself. These models understand different kinds of content and work across several languages to organize a vast sea of information, from text to images to videos, in Windows. This data is transformed and stored in a vector store called Windows Semantic Index. The semantic index is stored entirely on the user’s local device and is accessible through natural language search. This deep integration allows a uniquely robust approach to privacy, as the data does not leave the local device.

To help developers bring the same natural language search capability to their apps, we will later make the Vector Embeddings and RAG APIs available in Windows Copilot Library. Developers will be able to build their own semantic index with their own app data and, by combining it with the Retrieval Augmented Generation (RAG) API, bring natural language search to their apps. This is a great example of how we are building new features using the models and APIs in Windows Copilot Runtime and offering the same capability for developers to use in their apps.
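The Vector Embeddings and RAG APIs are described above as coming later, so the sketch below does not use them. It only illustrates, in plain Python, the general pattern they target: turn app data into vectors, store them, retrieve the entries closest to a natural language query, and pass those entries to a language model as context. `embed`, `VectorStore`, and `build_prompt` are hypothetical names; the bag-of-words `embed` is a stand-in for a learned embedding model, and the brute-force search is a stand-in for an approximate nearest-neighbor index such as DiskANN.

```python
import math
import re
from collections import Counter


def embed(text, vocabulary):
    """Toy stand-in for an embedding model: L2-normalized word counts.

    A learned model produces dense vectors that capture meaning; this
    bag-of-words version only captures shared words.
    """
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    vec = [float(counts[word]) for word in vocabulary]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class VectorStore:
    """Minimal in-memory vector store with exact (brute-force) cosine search."""

    def __init__(self, documents):
        self.documents = list(documents)
        words = set()
        for doc in self.documents:
            words.update(re.findall(r"[a-z]+", doc.lower()))
        self.vocabulary = sorted(words)
        self.vectors = [embed(doc, self.vocabulary) for doc in self.documents]

    def search(self, query, k=1):
        """Return the k stored documents most similar to the query."""
        q = embed(query, self.vocabulary)
        # All vectors are unit length, so the dot product is the cosine similarity.
        scored = [
            (sum(a * b for a, b in zip(q, v)), doc)
            for v, doc in zip(self.vectors, self.documents)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored[:k]]


def build_prompt(question, store, k=2):
    """RAG step: ground the model's prompt in the retrieved app data."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    notes = [
        "Quarterly budget review moved to Thursday",
        "Recipe: lemon pasta with garlic and parmesan",
        "Flight to Seattle departs at 9am on Monday",
    ]
    store = VectorStore(notes)
    print(store.search("when does my flight to seattle leave", k=1))
    print(build_prompt("what pasta recipe did I save", store))
```

Keeping everything on the device, as Windows Semantic Index does, means both the vectors and the source text stay local; only the retrieval and prompt-building logic shown here would live in the app.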

The APIs in the Windows Copilot Library cover the full spectrum from low-code APIs to sophisticated pipelines to fully multi-modal models.

Windows is the first platform to have a state-of-the-art SLM shipping inbox and Phi Silica is custom built for the NPUs in Copilot+ PCs

We recently introduced Phi-3, the most capable and cost-effective SLM. Phi-3-mini does better than models twice its size on key benchmarks. Today we are introducing Phi Silica, built from the Phi series of models. Phi Silica is the state-of-the-art (SOTA) SLM included out of the box and is custom built for the NPUs in Copilot+ PCs. With prompt processing fully offloaded to the NPU, Phi Silica processes prompts at about 650 tokens/second (the rate that determines first-token latency) while drawing only about 1.5 W of power, leaving your CPU and GPU free for other computations. Token generation reuses the KV cache from the NPU and runs on the CPU, producing about 27 tokens/second.
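As a rough illustration of what these two rates mean for a single request, the arithmetic below assumes both rates stay constant (real throughput varies with prompt length, context, and hardware). Prompt processing time approximates the wait before the first token appears, and generation time grows with the length of the reply. The function name and the 512-token prompt / 100-token reply are illustrative choices, not measurements.

```python
def estimate_response_time(prompt_tokens, output_tokens,
                           prefill_rate=650.0, decode_rate=27.0):
    """Back-of-the-envelope timing, in seconds, for one Phi Silica request.

    prefill_rate: prompt tokens processed per second on the NPU (~650, per the post).
    decode_rate: tokens generated per second on the CPU (~27, per the post).
    Assumes both rates are constant, which is a simplification.
    """
    prefill_s = prompt_tokens / prefill_rate  # roughly the time to first token
    decode_s = output_tokens / decode_rate    # time to generate the reply
    return prefill_s, decode_s, prefill_s + decode_s


if __name__ == "__main__":
    prefill, decode, total = estimate_response_time(512, 100)
    print(f"prompt: {prefill:.2f}s, generation: {decode:.2f}s, total: {total:.2f}s")
    # prints "prompt: 0.79s, generation: 3.70s, total: 4.49s"
```

Under these assumptions, generation rather than prompt processing dominates the total time for anything beyond a short reply.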

These are just a few examples of the APIs available to developers in the Windows Copilot Library. As new models and new libraries come to Windows, the possibilities will only grow. We want to make it easy for developers to bring powerful AI features into their apps, and Windows Copilot Library is the perfect place to start.

We consistently ensure Windows AI experiences are safe, fair, and trustworthy, following our Microsoft Responsible AI principles. When developers extend their apps with Windows Copilot Library, they automatically inherit those Responsible AI guardrails.

Developers can bring their own models and scale across the breadth of Windows hardware powered by DirectML

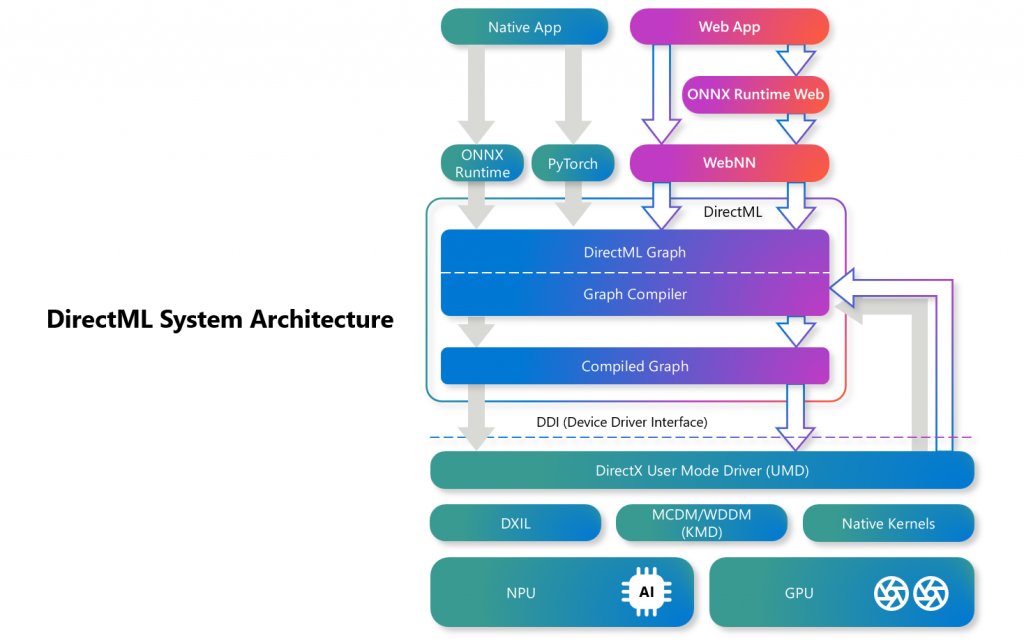

While the models that ship with Windows 11 power a wide range of AI experiences, many developers will want to bring their own models to Windows to power their applications. As an open platform, Windows supports a diverse silicon ecosystem, and Windows has simplified optimizing models across that silicon with DirectML. Just as DirectX is for graphics, DirectML is the high-performance, low-level API for machine learning on Windows.

DirectML abstracts across the different hardware options our Independent Hardware Vendor (IHV) partners bring to the Windows ecosystem and supports GPUs and NPUs, with CPU integration coming soon. It integrates with relevant frameworks, such as ONNX Runtime, PyTorch, and WebNN.

PyTorch is now natively supported on Windows with DirectML

We know that a lot of developers do their PyTorch development on Windows. So, we’re thrilled to announce that Windows now natively supports PyTorch through DirectML. Native PyTorch support means that thousands of Hugging Face models will just work on Windows. Not just that – we’re collaborating with NVIDIA to scale these development workflows to over 100M RTX AI GPUs.

PyTorch support on GPUs is available starting today, with NPU support coming soon.
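A minimal sketch of running PyTorch work on a DirectML-supported GPU, assuming the `torch-directml` package is installed alongside PyTorch. `torch_directml.device()` returns a device that tensors and models can be moved to with `.to(...)`; `pick_device` and `run_demo` are hypothetical helper names, and the CPU fallback keeps the script usable on machines without DirectML.

```python
import importlib.util


def pick_device():
    """Prefer a DirectML device when torch-directml is installed; else use the CPU."""
    if importlib.util.find_spec("torch_directml") is not None:
        import torch_directml  # installed with: pip install torch-directml
        return torch_directml.device()  # the default DirectML adapter
    return "cpu"


def run_demo():
    """Multiply two small random tensors on the chosen device (requires PyTorch)."""
    import torch

    device = pick_device()
    a = torch.randn(2, 3).to(device)
    b = torch.randn(3, 2).to(device)
    return (a @ b).cpu()  # copy the result back to the CPU before printing


if __name__ == "__main__":
    print("device:", pick_device())
    if importlib.util.find_spec("torch") is not None:
        print(run_demo())
```

The same `.to(device)` call works for an `nn.Module`, which is how an existing PyTorch model, including one loaded from Hugging Face, would be moved onto the DirectML device.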

We recognize that many developers start with web apps today. Web apps should also be able to take advantage of silicon on local devices to deliver AI experiences to users.

DirectML now supports web apps that can take advantage of silicon to deliver AI experiences powered by WebNN

From native to web applications, DirectML now brings local AI at scale to the web across Windows through the new WebNN Developer Preview. WebNN, an emerging web standard for machine learning, is powered by DirectML and ONNX Runtime Web. It simplifies how developers can leverage the hardware on their users’ devices so that web apps can deliver AI experiences at near-native performance for tasks such as generative AI, image processing, natural language processing, computer vision, and more. The WebNN Developer Preview supports GPUs, with broader accelerator coverage, including NPUs, coming soon.

High-performance inferencing on Windows with ONNX Runtime and DirectML

Microsoft’s ONNX Runtime builds on the power of the open-source community to enable developers to ship their AI models to production with the performance and cross-platform support they need. ONNX Runtime with DirectML applies state-of-the-art optimizations to get the best performance for generative AI models such as Phi, Llama, Mistral, and Stable Diffusion. With ONNX Runtime, developers can extend their Windows applications to other platforms, such as web, cloud, or mobile, wherever they need to ship their application. ONNX Runtime is how Microsoft apps like Office, Visual Studio Code, and even Windows itself ship their AI to run on-device.
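A minimal sketch of creating an ONNX Runtime inference session that prefers DirectML, assuming the `onnxruntime-directml` package is installed (ONNX Runtime names the DirectML execution provider `DmlExecutionProvider`). `choose_providers` and `create_session` are hypothetical helper names, and `model_path` stands for any ONNX model file. The session options follow the DirectML execution provider guidance to disable memory-pattern optimization and run sequentially.

```python
import importlib.util

# Execution providers in order of preference: DirectML first, CPU as fallback.
PREFERRED_PROVIDERS = ["DmlExecutionProvider", "CPUExecutionProvider"]


def choose_providers(available):
    """Return the preferred providers that are available, in preference order."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    return chosen or ["CPUExecutionProvider"]


def create_session(model_path):
    """Create an ONNX Runtime session that uses DirectML when it is present."""
    import onnxruntime as ort

    options = ort.SessionOptions()
    options.enable_mem_pattern = False  # not supported by the DirectML provider
    options.execution_mode = ort.ExecutionMode.ORT_SEQUENTIAL
    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, sess_options=options, providers=providers)


if __name__ == "__main__":
    if importlib.util.find_spec("onnxruntime") is not None:
        import onnxruntime as ort
        print(choose_providers(ort.get_available_providers()))
    else:
        print(choose_providers([]))
```

Listing the CPU provider after DirectML lets ONNX Runtime fall back to the CPU for any operators the DirectML provider does not handle, so the same code runs on machines with and without a supported GPU.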

DirectML helps scale your efforts across the Windows ecosystem – whether you are building your own models or you want to bring an open-source model from Hugging Face, and whether you are building a native Windows app or a web app.

DirectML is generally available across all Windows GPUs. DirectML support for Intel® Core™ Ultra processors with Intel® AI Boost is available as a Developer Preview, with general availability coming soon, and support for the Qualcomm® Hexagon™ NPU in the Snapdragon X Elite SoC is coming soon. Stay tuned for more DirectML features that will simplify how developers can differentiate with AI and scale their innovations across Windows. Grab your favorite model and get started with DirectML today with the DirectML Overview or the Windows AI Dev Center on Microsoft Developer.

Windows Subsystem for Linux (WSL) offers a robust platform for AI development on Windows by making it easy to run Windows and Linux workloads simultaneously. Developers can easily share files, GUI apps, GPUs, and more between environments with no additional setup. WSL is now enhanced to meet enterprise-grade security requirements, so enterprise customers can confidently deploy WSL for their developers to take advantage of both Windows and Linux on the same Windows device and accelerate AI development efficiently.

WSL now incorporates two new Zero Trust features, Linux Intune agent integration and Microsoft Entra ID integration, to help system administrators enhance enterprise security. With Linux Intune agent integration, IT administrators can determine compliance based on WSL distro versions and more, using custom scripts. Microsoft Entra ID integration provides a Zero Trust experience for accessing protected enterprise resources from within a WSL distro by providing a secure channel to acquire and use tokens bound to the host device. The Linux Intune agent integration is currently in public preview, and Microsoft Entra ID integration will be in public preview this summer.

New experiences designed to help every developer become more productive on Windows 11

We know building great AI experiences starts with developer productivity. That’s why we are excited to announce new features in Dev Home, performance improvements to Dev Drive, and improvements to your favorite tool, PowerToys.

At Build last year, we announced Dev Home, and since then we have been evolving Dev Home to be the one-stop shop for setting up your Windows machine for development. We have made some key improvements to Dev Home to further boost developer productivity. Dev Home is now installed on every Windows machine, making it easy to get started. We are introducing Environments and Windows Customization, and welcoming WSL and a subset of PowerToys utilities to Dev Home.

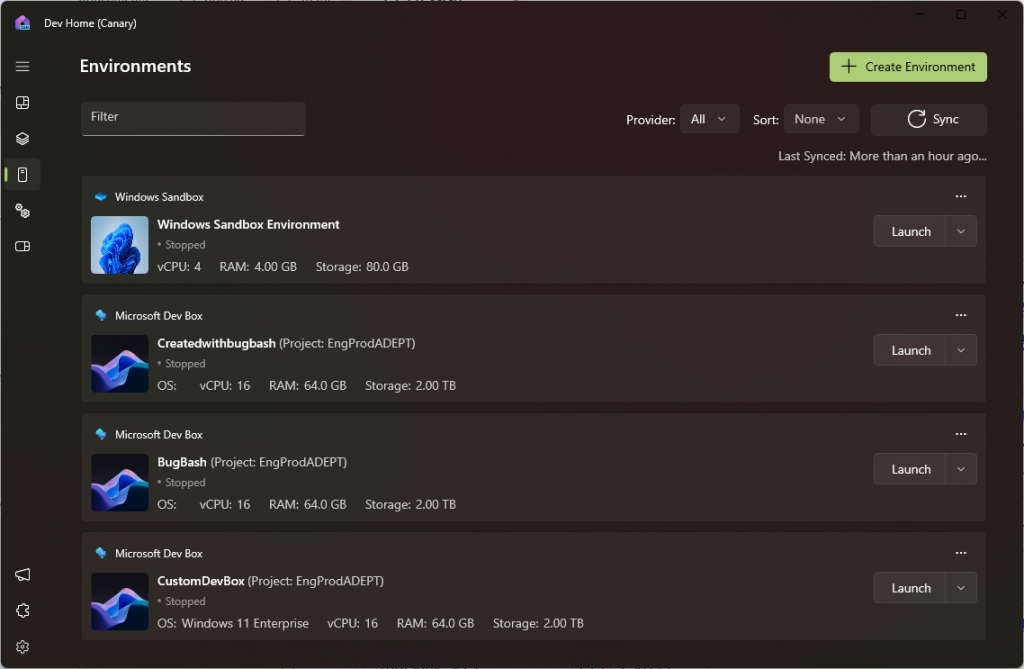

Environments in Dev Home help centralize your interactions with all remote environments. Create, manage, launch and configure dev environments in a snap from Dev Home

For developers who often use virtual machines and remote environments, Environments in Dev Home is for you. With support for Hyper-V VMs and cloud Microsoft Dev Boxes, you can create new environments and set them up with repositories, apps, and packages. You can perform quick actions such as taking snapshots, starting, and stopping, and even pin environments to the Start menu and taskbar, all from Environments in Dev Home. To make this experience even more powerful, it’s all extensible and open source, so you can add your own environments. Environments in Dev Home is available now in preview.

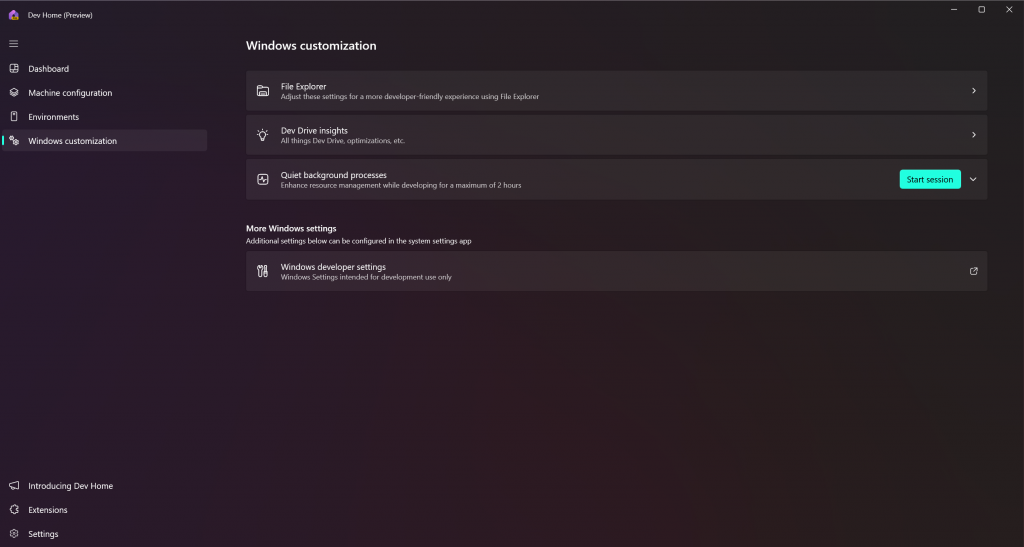

We know developers want zero distractions when coding, and customizing your dev machine to the ideal state is critical for productivity. We also know developers want more control and agency on their device. That’s why we are releasing Windows Customization feature in Dev Home.

Windows Customization in Dev Home allows developers to customize their device to an ideal state with fewest clicks

Windows Customization gives developers access to Dev Drive insights, advanced File Explorer settings, virtual machine management, and the ability to quiet background processes, giving developers more control over their Windows machine. Submit feature requests for what you want to see in Windows Customization on GitHub.

New Export feature in Dev Home Machine Configuration allows you to quickly create configuration files to share with your teammates, boosting productivity

WinGet configuration files are an easy way to get your machine set up for development exactly how you like it. For a streamlined experience, try the new export feature in Dev Home which allows you to generate a configuration file based on the choices you made in Dev Home’s Machine Configuration setup flow, allowing you to quickly create configuration files to share with your teammates for a consistent machine setup.

Lastly, when cloning a repository in Dev Home that contains a configuration file, Dev Home can now detect that file and let you run it right away, allowing you to get set up for coding even faster than before.

In addition to these new features, we are bringing WSL and a subset of PowerToys utilities to Dev Home, truly making Dev Home your one-stop shop for all your development needs. You can now access WSL right from Dev Home in the Environments tab. Also, a subset of PowerToys utilities such as Hosts File Editor, Environment Variables, and Registry Preview can be accessed in the new Utilities tab on Dev Home. These features are currently available in preview.

Dev Drive introduces block cloning, which allows developers to perform large file copy operations nearly instantaneously

At the heart of developer productivity lies improving performance for developer workloads on Windows. Last year at Build, we announced Dev Drive, a new storage volume tailor-made for developers and supercharged for performance and security. Since then, we have continued to invest further in Windows performance improvements for developer workloads.

With the release of Windows 11 24H2, workflows will get even faster when developing on a Dev Drive. The Windows copy engine now supports file system block cloning, resulting in nearly instantaneous copy actions and drastically improving performance, especially in developer scenarios that copy large files. Our benchmarks include the following:

| File(s) Copied | NTFS | Dev Drive w/ Block Cloning | % Improvement |
|---|---|---|---|
| 10GB file | 7s 964ms | 641ms | 92% |
| 1GB file | 681ms | 38ms | 94% |
| 1MB file | 11ms | 9ms | 18% |
| 18GB folder (5,815 files) | 30s 867ms | 6s 306ms | 80% |
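The % Improvement column in the benchmark table is the reduction in copy time relative to NTFS, that is, (NTFS time - Dev Drive time) / NTFS time. The short check below recomputes it from the table’s timings (converted to milliseconds) and also reports the equivalent speedup factor; `BENCHMARKS` and `improvement` are illustrative names, and the numbers are copied from the table rather than newly measured.

```python
# Copy timings from the benchmark table, in milliseconds: (NTFS, Dev Drive w/ Block Cloning).
BENCHMARKS = {
    "10GB file": (7964, 641),
    "1GB file": (681, 38),
    "1MB file": (11, 9),
    "18GB folder (5815 files)": (30867, 6306),
}


def improvement(before_ms, after_ms):
    """Percentage reduction in copy time: (before - after) / before * 100."""
    return (before_ms - after_ms) / before_ms * 100


if __name__ == "__main__":
    for name, (ntfs, dev_drive) in BENCHMARKS.items():
        pct = improvement(ntfs, dev_drive)
        speedup = ntfs / dev_drive
        print(f"{name}: {pct:.0f}% less time ({speedup:.1f}x faster)")
```

Expressed as speedups, the large-file copies are more than ten times faster, while the 1MB copy barely changes, which is consistent with block cloning paying off mainly when there is a lot of data to avoid rewriting.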

Dev Drive is a must for any developer, especially if you are dealing with repositories with many files, or large files. You can set up Dev Drive through the Settings app under System->Storage->Disks and Volumes page.
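Dev Drive is built on ReFS, and in broad terms block cloning makes copies fast because the file system does not read and rewrite every byte: the new file is pointed at the same on-disk blocks as the original, and a separate block is only allocated when one of the files is later modified (copy-on-write). The toy model below illustrates that idea in plain Python; `ToyVolume` and its methods are hypothetical and are not how ReFS or the Windows copy engine are actually implemented.

```python
import itertools


class ToyVolume:
    """Toy model of block cloning: files are lists of IDs pointing at shared blocks.

    Cloning copies only the block references (metadata), not the data.
    Writing to a shared block gives the writer its own new block
    (copy-on-write), so the other file is unaffected.
    """

    def __init__(self):
        self.blocks = {}    # block ID -> bytes
        self.refcount = {}  # block ID -> number of files referencing it
        self.files = {}     # file name -> list of block IDs
        self._ids = itertools.count()

    def _new_block(self, data):
        block_id = next(self._ids)
        self.blocks[block_id] = data
        self.refcount[block_id] = 1
        return block_id

    def write_file(self, name, chunks):
        self.files[name] = [self._new_block(chunk) for chunk in chunks]

    def clone_file(self, src, dst):
        """Block-clone copy: share the source's blocks instead of copying bytes."""
        ids = list(self.files[src])
        for block_id in ids:
            self.refcount[block_id] += 1
        self.files[dst] = ids

    def write_block(self, name, index, data):
        """Overwrite one block; if it is shared, allocate a private block instead."""
        block_id = self.files[name][index]
        if self.refcount[block_id] > 1:
            self.refcount[block_id] -= 1
            self.files[name][index] = self._new_block(data)
        else:
            self.blocks[block_id] = data

    def read_file(self, name):
        return b"".join(self.blocks[b] for b in self.files[name])


if __name__ == "__main__":
    vol = ToyVolume()
    vol.write_file("big.bin", [b"aaaa", b"bbbb", b"cccc"])
    vol.clone_file("big.bin", "copy.bin")   # no data bytes are copied
    print(len(vol.blocks))                  # prints 3: both files share the same blocks
    vol.write_block("copy.bin", 1, b"XXXX")  # copy-on-write on first modification
    print(vol.read_file("big.bin"), vol.read_file("copy.bin"), len(vol.blocks))
```

In this model the cost of `clone_file` grows with the number of block references rather than the amount of data, which is the intuition behind the large-file results in the benchmark table.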

Reducing toil and unlocking the fun and joy of development on Windows with new features and improvements

Sudo for Windows allows developers to run elevated commands right in Terminal

For command line users, we’re providing a simple and familiar way for elevating your command prompt with Sudo for Windows. Simply enable Sudo within Windows developer settings and you can get started running elevated commands with Sudo right in your terminal. You can learn more about Sudo on GitHub.

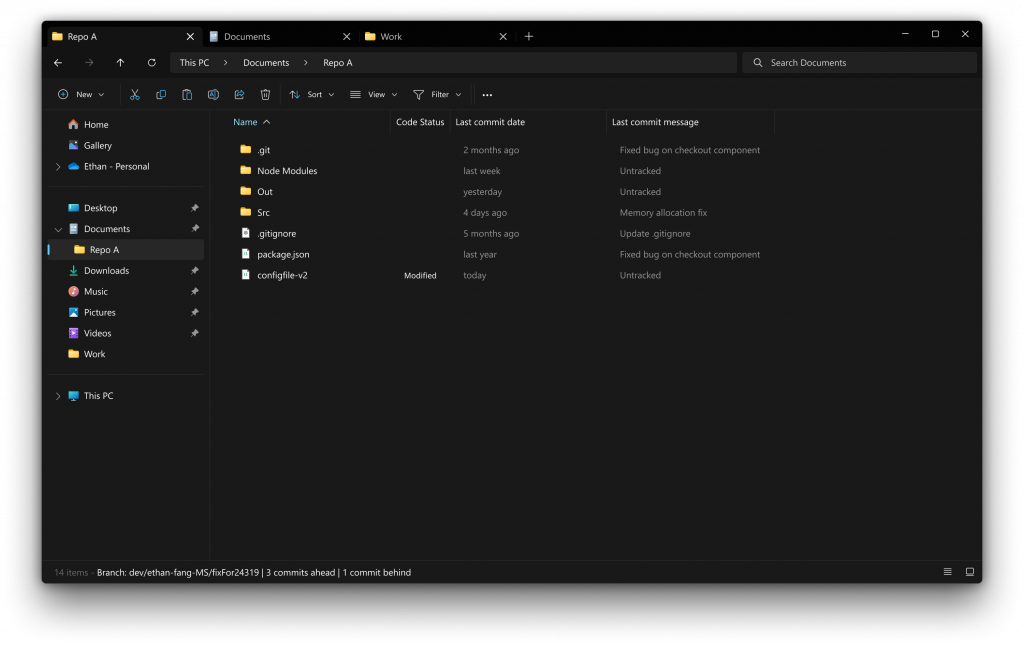

New Source code integration in File Explorer allows tracking commit messages and file status directly in File Explorer

File Explorer will provide even more power to developers with version control protocol integration (including Git). This allows developers to monitor data including file status, commit messages, and current branch directly from File Explorer. File Explorer has also gained the ability to compress to 7-Zip and TAR formats.

Continuing to innovate and accelerate development for Windows on Arm

The Arm developer ecosystem momentum continues to grow with updates to Visual Studio, .NET, and many key tools delivering Arm native versions. Windows is continuing to welcome more third-party Windows apps, middleware partners and Open-Source Software natively to Arm. Learn how to add Arm support for your apps.

- Visual Studio now includes Arm-native SQL Server Data Tools (SSDT), the #1 requested Arm-native workload for VS.

- .NET 8 includes many performance improvements for Arm; see “Performance Improvements in .NET 8” on the .NET Blog.

- The Unity game editor for Arm is now available in preview and will release to market with the next Unity update, allowing game developers to build, test, and run Unity titles for Arm-powered Windows devices.

- Blender Arm-native builds are available in preview, with official long-term support builds expected to ship in June. Blender is the free and open-source 3D creation suite. It supports the entire 3D pipeline (modelling, rigging, animation, simulation, rendering, compositing, and motion tracking), as well as video editing and game creation.

- Arm-native Docker tools for Windows are now available.

- GitHub Actions now has Arm64 runners on Windows. They are available in private preview, with a public preview expected in the coming months. You can apply to join the private preview.

- GIMP is adding Arm-native support, with long-term support starting in v3.0, which will be available in May.

- The Qt 6.8 release, due in September, will move Arm-native support for Windows to LTS.

Continuing investments in WinUI 3 and WPF to help developers build rich, modern Windows applications

Windows is an open and versatile platform that supports a wide range of UI technologies. If you are looking to develop native Windows applications using our preferred UI development language, XAML, we recommend using either WinUI 3 or WPF.

WinUI 3 includes a modern native compositor and excels at media and graphics-focused consumer and commercial applications. WPF has a longer history and can take advantage of a deep ecosystem of commercial products as well as free and open source projects, many of which are focused on enterprise and data-intensive scenarios. We recommend you first consider WinUI 3, and if that meets your app’s needs, proceed with it for the most modern experience. Otherwise, WPF is an excellent choice. Both WinUI 3 and WPF can take advantage of all Windows has to offer, including the new features and APIs in the Windows App SDK, so you can feel confident in creating a modern application in either technology.

WinUI 3 and Windows App SDK now support native Maps control and .NET 8

With the latest updates to Windows App SDK 1.5+, we shipped several developer-requested features, including support for .NET 8, with its faster startup, smaller footprint, and new runtime features. We’ve also brought one of the most requested features to WinUI 3: the Maps control, powered by WebView2 and Azure Maps. You can learn more about the controls and features in WinUI 3 in the interactive WinUI 3 Gallery app.

Microsoft apps like Photos and File Explorer have migrated to WinUI 3, and developers such as Apple (Apple TV, Apple Music, iCloud, Apple Devices) and Yair A (Files App) are adopting WinUI 3 as well.

Windows 11 theme support makes it easy to modernize the look and feel of your WPF applications

WPF is popular, especially for data-heavy and enterprise apps. We listened to your feedback and are committed to continuing investments in WPF. With the latest updates to WPF, we have made it easier than ever to modernize the look and feel of your app through support for Windows 11 theming. We also improved integration with Windows by including a native FolderBrowserDialog and managed DWrite.

Developers, including Morgan Stanley and Reincubate, have created great apps that showcase what can be built using WPF.

Our updated Windows Dev Center includes information on both WinUI 3 and WPF to help you make the best decision for your application.

Extend Windows apps into 3D space

As Windows transforms for the era of AI, we are continuing to expand the reach of the platform, including all the AI experiences developers create with the Windows Copilot Runtime. We are delivering Windows from the cloud with Windows 365 so apps can reach any device, anywhere. And we are introducing Windows experiences to new form factors beyond the PC.

For example, we are deepening our partnership with Meta to make Windows a first-class experience on Quest devices. And Windows can take advantage of Quest’s unique capabilities to extend Windows apps into 3D space. We call these Volumetric apps. Developers will have access to a volumetric API. This is just one of many ways to broaden your reach through the Windows ecosystem.

Building for the future of AI on Windows

This past year has been incredibly exciting as we reimagined the Windows PC in this new era of AI. But this is just the start of our journey. With the most efficient and performant Windows PCs ever built, powered by the game-changing NPU technology, and an OS with AI at its core, we have listened to your feedback and worked to make Windows the very best platform for developers.

We look forward to continuing to partner with you, our developer and MVP community, to bring innovation to our platform and tools, and to enable each of you to create future AI experiences that will empower every person on the planet to achieve more. We can’t wait to see what you will build next.

Editor’s note, May 21, 2024: This post was updated to reflect the latest product information on Snapdragon Dev Kit for Windows.

Disclaimers

1 Tested April 2024 using a debug application for the Windows Studio Effects workload, comparing pre-release Copilot+ PC builds with a Snapdragon X Elite 12-core configuration to a Windows 11 PC with an Intel 12th gen i7 configuration.

2 Tested April 2024 using a Phi SLM workload running 512-token prompt processing in a loop with default settings, comparing pre-release Copilot+ PC builds with Snapdragon X Elite 12-core and Snapdragon X Plus 10-core configurations (QNN build) to a Windows 11 PC with an NVIDIA 4080 GPU configuration (CUDA build).

3 Optimized for select languages (English, Chinese (Simplified), French, German, Japanese, and Spanish). Content-based and storage limitations apply. See aka.ms/copilotpluspcs.

4 Optimized for English text prompts. See aka.ms/copilotpluspcs.

Source: Windows Blog
